Running Agentic Search in Production

Why Compliance and Operations Are Inseparable

Most AI systems treat compliance as a post-deployment problem: build the system, then figure out how to make it safe. In real estate, that approach doesn’t work. A single Fair Housing Act violation—steering a client based on race, religion, or familial status—can cost a brokerage millions in fines and destroy its reputation. Compliance isn’t optional, and it can’t be patched in after the fact.

This post walks through how we operate Rockhood’s agentic search system in production: the compliance architecture that prevents violations before they happen, the reliability patterns that keep latency predictable, the observability infrastructure that makes every decision traceable, and the production learnings that shaped our current design.

If you haven’t read the architecture post, start there. This article assumes you understand the agent loop and focuses on what happens when you actually run it at scale in a regulated industry.

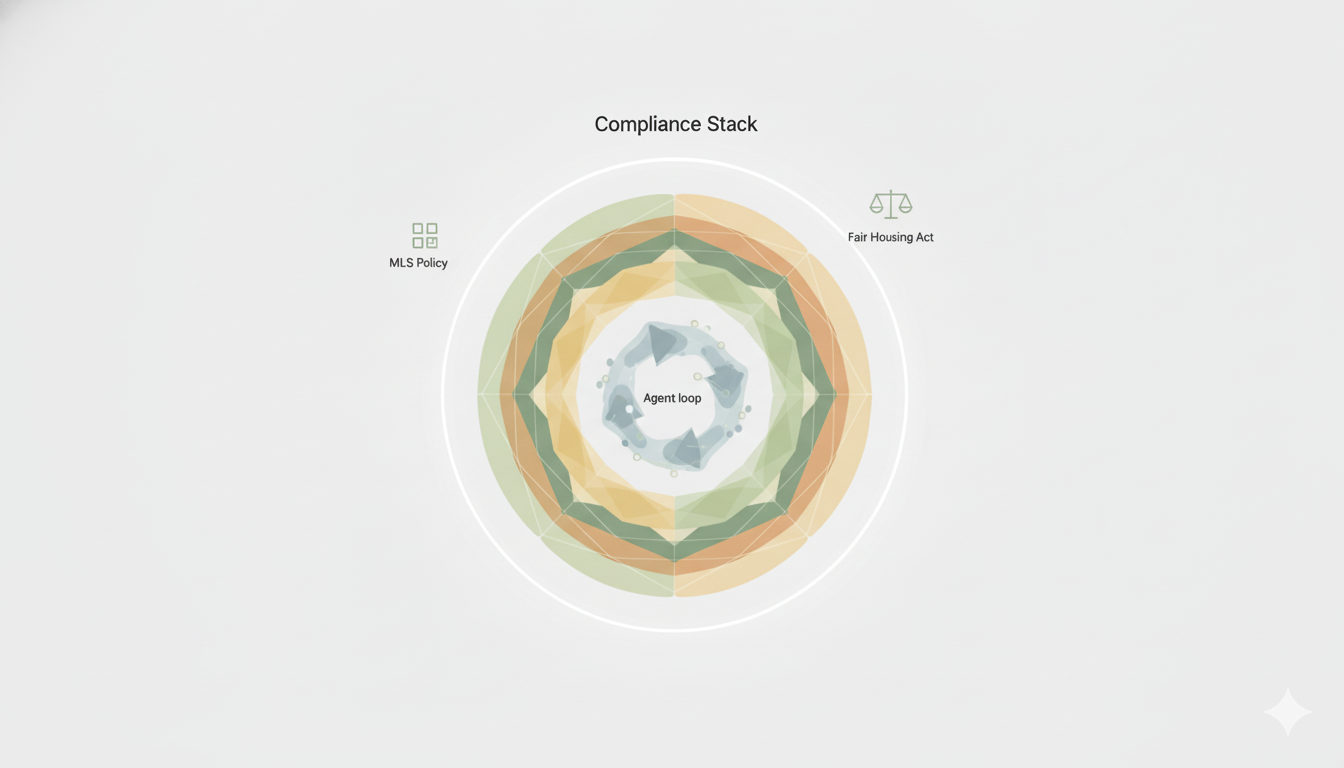

The Compliance Stack: Guardrails by Design

Real estate AI faces unique compliance challenges. The Fair Housing Act prohibits discrimination based on seven protected classes: race, color, religion, national origin, sex, familial status, and disability. MLS policies prohibit exposing certain seller-side data (like seller motivation or days-to-close incentives). NAR guidelines require audit trails for AI-generated content shared with clients.

We can’t just fine-tune a model and hope it learns compliance. Language models absorb biases from training data and can generate subtle violations (“great for families” → familial status discrimination). Our approach: guardrails by design—compliance filters built into the architecture, not bolted on afterward.

Layer 1: Protected Class Filtering

Before the LLM generates a response, we scan both the user’s query and the evidence context for risky signals. If the query contains protected class references (“Show me homes near churches” → religion), we flag it for clarification rather than proceeding.

After generation, we run the output through a multi-stage filter:

- Keyword scanner: Catches explicit protected class terms and common proxies for them (synagogue, mosque, elementary school)

- Contextual classifier: Detects subtle violations (“quiet neighborhood perfect for retirees” → familial status, plus age where state law protects it)

- Phrasing controls: Rewrites risky language to neutral alternatives

Here’s a real example. A user asks: “What neighborhoods in Seattle are good for families with young kids?”

The naive response might be: “Queen Anne has excellent elementary schools and lots of young families.” This is a Fair Housing violation—it references familial status (young kids) and implies steering based on that characteristic.

Our guardrails rewrite it: “Queen Anne has a mix of housing types and public schools. I can show you recent sales data and market trends to help you evaluate options.”

The key difference: we provide factual data (sales prices, days on market (DOM), inventory) without making judgments about “suitability” based on protected classes. The system can help analyze the market; the client makes the decision.
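The keyword and phrasing stages above can be sketched in a few lines. This is a minimal illustration, not Rockhood's production filter: the term list, the rewrite table, and the names `scan_keywords` and `run_output_filter` are all assumptions, and the contextual classifier (which would be a trained model) is left out.

```python
import re
from dataclasses import dataclass, field

# Hypothetical term list mapping risky terms to the category they signal.
RISKY_TERMS = {
    "synagogue": "religion",
    "mosque": "religion",
    "church": "religion",
    "young families": "familial status",
    "retirees": "familial status",
}

# Hypothetical phrase-level rewrites to neutral alternatives.
NEUTRAL_REWRITES = {
    "lots of young families": "a mix of housing types",
}


@dataclass
class FilterResult:
    text: str
    categories: list = field(default_factory=list)


def scan_keywords(text: str) -> list:
    """Return the categories whose terms appear in the text (plural-tolerant)."""
    lowered = text.lower()
    found = []
    for term, category in RISKY_TERMS.items():
        pattern = rf"\b{re.escape(term)}(?:s|es)?\b"
        if re.search(pattern, lowered) and category not in found:
            found.append(category)
    return found


def run_output_filter(text: str) -> FilterResult:
    """Flag risky categories, then rewrite known risky phrases."""
    categories = scan_keywords(text)
    rewritten = text
    for phrase, neutral in NEUTRAL_REWRITES.items():
        rewritten = re.sub(re.escape(phrase), neutral, rewritten, flags=re.IGNORECASE)
    return FilterResult(text=rewritten, categories=categories)
```

Recording the flagged categories alongside the rewritten text matters as much as the rewrite itself, because the audit trail needs to show which filter fired and what it changed.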

Layer 2: MLS Policy Enforcement

Every MLS has different data usage policies. NWMLS prohibits exposing “seller motivation” or “special terms.” CRMLS restricts how listing agent contact info can be displayed. We enforce these at the connector level—before data even enters the evidence builder.

Each MLS connector implements a per-MLS allowlist: only approved fields (address, price, beds, baths, sqft, DOM, photos) are passed downstream. If a query asks for restricted data (“Why is the seller motivated?”), the planner refuses and explains the limitation.

This isn’t just defensive—it protects us contractually. MLS violations can result in license revocation, which would kill the product. By enforcing policies in code, we make violations structurally impossible.
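A connector-level allowlist can be as small as a dictionary lookup. The sketch below assumes field names like `seller_motivation` and a CRMLS allowlist purely for illustration; the real field names and per-MLS rules come from each MLS contract.

```python
from typing import Any

# Hypothetical per-MLS allowlists. In practice these would be loaded
# from configuration so they can change without a redeploy.
ALLOWED_FIELDS = {
    "NWMLS": {"address", "price", "beds", "baths", "sqft", "dom", "photos"},
    "CRMLS": {"address", "price", "beds", "baths", "sqft", "dom"},
}


def filter_listing(mls: str, raw: dict) -> dict:
    """Keep only allowlisted fields; an unknown MLS passes nothing (fail closed)."""
    allowed = ALLOWED_FIELDS.get(mls, set())
    return {key: value for key, value in raw.items() if key in allowed}


def filter_listings(mls: str, rows: list) -> list:
    """Apply the allowlist to every row before it reaches the evidence builder."""
    return [filter_listing(mls, row) for row in rows]
```

Failing closed is the important design choice here: a field that nobody has reviewed is dropped by default rather than exposed by default.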

Layer 3: Agent Approval Gates

Not all content requires human review. Market stats, comparable sales, and property searches are low-risk—they’re factual MLS data. But some outputs need approval:

- Listing descriptions (risk: steering language)

- Client email campaigns (risk: personalization based on protected classes)

- Custom market reports (risk: cherry-picking data to favor certain demographics)

When the system generates these, we flag them for agent review before sending. The agent sees the raw output, the evidence it’s based on, and a compliance checklist (“Does this reference protected classes? Does this make subjective judgments about neighborhoods?”).

This creates accountability. If a violation slips through, we have an audit trail showing who reviewed and approved it.
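One way to express the gate is a send check that depends on content type and on whether a reviewer is recorded. The enum values and the `Draft` type below are hypothetical names chosen for this sketch.

```python
from dataclasses import dataclass, replace
from enum import Enum
from typing import Optional


class ContentType(Enum):
    MARKET_STATS = "market_stats"
    COMPARABLE_SALES = "comparable_sales"
    PROPERTY_SEARCH = "property_search"
    LISTING_DESCRIPTION = "listing_description"
    EMAIL_CAMPAIGN = "email_campaign"
    CUSTOM_REPORT = "custom_report"


# Content types that must be reviewed by a human agent before sending.
NEEDS_APPROVAL = {
    ContentType.LISTING_DESCRIPTION,
    ContentType.EMAIL_CAMPAIGN,
    ContentType.CUSTOM_REPORT,
}


@dataclass(frozen=True)
class Draft:
    content_type: ContentType
    text: str
    approved_by: Optional[str] = None


def approve(draft: Draft, reviewer: str) -> Draft:
    """Return a copy of the draft with the reviewer recorded for the audit trail."""
    return replace(draft, approved_by=reviewer)


def can_send(draft: Draft) -> bool:
    """Low-risk content sends immediately; gated content needs a recorded reviewer."""
    if draft.content_type not in NEEDS_APPROVAL:
        return True
    return draft.approved_by is not None
```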

Layer 4: Audit Trails and Logging

Every query, every generated response, and every compliance decision is logged. We retain:

- Query traces: User input, planner decisions, tool calls, MLS responses

- Evidence snapshots: What data was available when the decision was made

- Compliance flags: What filters triggered, what text was rewritten, who approved it

- Retention policy: 7 years (NAR recommendation for compliance audits)

When a Fair Housing complaint comes in—and in real estate, they will—we can reconstruct exactly what the system did and why. This isn’t paranoia; it’s operational necessity.

Performance and Reliability: Hitting Latency Targets at Scale

We target 2–10 seconds for typical queries. That’s a wide range, but real estate queries vary wildly: “median price in Seattle” is fast (cached aggregate), while “top 10 comps for this specific property” is slow (parallel MLS searches, ranking, generation).

Here’s how we hit those targets consistently.

Parallelization with Timeouts and Circuit Breakers

The planner issues multiple MLS queries in parallel (sold listings, active listings, market stats). Each has a per-tool timeout (3 seconds) and a circuit breaker (after 3 consecutive failures, we skip that MLS for 60 seconds).

Why timeouts? MLS APIs are unpredictable. NWMLS usually responds in 500ms, but sometimes takes 5+ seconds. Without timeouts, a single slow query blocks the entire response. With timeouts, we proceed with partial results—if NWMLS times out but CRMLS succeeds, we return CRMLS data and note the gap.

Why circuit breakers? If an MLS is down or rate-limiting us, retrying every query wastes time and burns rate limits. Circuit breakers detect persistent failures and fail fast, improving latency for subsequent requests.
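Here is a minimal sketch of the pattern using `asyncio`. The thresholds match the numbers above (3 seconds, 3 failures, 60 seconds), but the class and function names are assumptions, and a production breaker would usually add a half-open probing state that this one omits.

```python
import asyncio
import time
from typing import Any, Awaitable, Callable, Dict


class CircuitBreaker:
    """Opens after N consecutive failures and stays open for a cooldown."""

    def __init__(self, failure_threshold: int = 3, cooldown_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.cooldown_s = cooldown_s
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def allow(self) -> bool:
        if self.opened_at is None:
            return True
        if self.clock() - self.opened_at >= self.cooldown_s:
            # Cooldown elapsed: close the breaker and let requests through.
            self.opened_at = None
            self.failures = 0
            return True
        return False

    def record_success(self) -> None:
        self.failures = 0

    def record_failure(self) -> None:
        self.failures += 1
        if self.failures >= self.failure_threshold:
            self.opened_at = self.clock()


async def query_with_guard(name: str, fetch: Callable[[], Awaitable[Any]],
                           breaker: CircuitBreaker, timeout_s: float = 3.0):
    """Return (name, result); result is None when the MLS is skipped or fails."""
    if not breaker.allow():
        return name, None
    try:
        result = await asyncio.wait_for(fetch(), timeout=timeout_s)
    except (asyncio.TimeoutError, ConnectionError):
        breaker.record_failure()
        return name, None
    breaker.record_success()
    return name, result


async def query_all(fetchers: Dict[str, Callable[[], Awaitable[Any]]],
                    breakers: Dict[str, CircuitBreaker],
                    timeout_s: float = 3.0) -> Dict[str, Any]:
    """Run every MLS query in parallel and keep whatever finished in time."""
    tasks = [query_with_guard(name, fetch, breakers[name], timeout_s)
             for name, fetch in fetchers.items()]
    return dict(await asyncio.gather(*tasks))
```

A `None` entry in the result marks a gap, which the response can then mention instead of silently presenting partial data as complete.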

Real numbers from production:

- P50 latency: 2.4 seconds (most queries hit cache or are simple searches)

- P95 latency: 6.8 seconds (complex comp analyses with multi-MLS queries)

- P99 latency: 9.2 seconds (worst case: cache miss + slow MLS + large evidence context)

These are measured end-to-end: user input → final response with citations.

Intelligent Caching: Staleness vs. Speed

We cache common aggregates (median price, average DOM, inventory counts) with a 15-minute TTL. This trades freshness for speed. Real estate markets don’t move that fast: if yesterday’s median price is usually fine for decision-making, one that is 15 minutes old certainly is.

Cache hit rates:

- Market stats queries: 78% (most users ask similar questions)

- Comparable sales: 23% (specific properties rarely repeat)

- Active listings: 12% (users want real-time inventory)

The cache is key-aware: median_price(location=Seattle, days=90) gets a different cache entry than median_price(location=Seattle, days=30). This prevents stale aggregates from bleeding across different time windows.

Cache invalidation: When we fetch fresh MLS data, we invalidate related cache entries. New sales in Queen Anne? Invalidate market_stats(location=Queen Anne, ...). This keeps the cache eventually consistent without manual flushing.
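The two ideas above, parameter-aware keys and location-scoped invalidation, fit in a small in-memory class. This is a sketch under assumptions: `TTLCache`, `make_key`, and `invalidate_location` are illustrative names, and a real deployment would more likely sit on a shared store than a Python dict.

```python
import time
from typing import Any, Callable, Optional


class TTLCache:
    """In-memory cache keyed on the query name plus every parameter."""

    def __init__(self, ttl_s: float = 15 * 60,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl_s = ttl_s
        self.clock = clock
        self._entries = {}

    @staticmethod
    def make_key(name: str, **params) -> tuple:
        # Sorting makes the key independent of keyword argument order.
        return (name, tuple(sorted(params.items())))

    def get(self, key: tuple) -> Optional[Any]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        stored_at, value = entry
        if self.clock() - stored_at >= self.ttl_s:
            del self._entries[key]  # expired: treat as a miss
            return None
        return value

    def put(self, key: tuple, value: Any) -> None:
        self._entries[key] = (self.clock(), value)

    def invalidate_location(self, location: str) -> int:
        """Drop every entry whose parameters name this location."""
        stale = [k for k in self._entries if dict(k[1]).get("location") == location]
        for key in stale:
            del self._entries[key]
        return len(stale)
```

Because the time window is part of the key, `median_price(location=Seattle, days=90)` and `median_price(location=Seattle, days=30)` can never be served from each other's entries.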

Context Compaction: Fitting Evidence into Token Budgets

LLMs have token limits. GPT-4 Turbo’s context window is 128k tokens, and that budget covers both input (evidence + prompt) and output (generated answer). We hold MLS data, aggregates, and instructions to ~8k tokens, which leaves room for the response.

For a comp analysis with 10 listings, raw MLS data is ~15k tokens (every field, verbose addresses, timestamps). We compact it:

- Drop unused fields (listing agent contact, MLS remarks, internal IDs)

- Abbreviate addresses (“123 Queen Anne Ave N” → “123 QA Ave N”)

- Round aggregates (median DOM: 11.847 → 12)

- Deduplicate repeated values (if all listings are 4 beds, mention it once)

This gets us to ~3k tokens without losing accuracy. The payoff: lower token costs and faster generation (fewer tokens to process).
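Three of the four compaction steps (dropping fields, rounding aggregates, deduplicating repeated values) can be sketched as below. The field names in `DROP_FIELDS` are assumptions, and address abbreviation is left out because it needs a per-market lookup table.

```python
from typing import Any, Dict, List

# Hypothetical names for fields the generation step never uses.
DROP_FIELDS = {"listing_agent_contact", "mls_remarks", "internal_id"}


def compact_listings(listings: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Drop unused fields, then hoist values shared by every listing."""
    kept = [{k: v for k, v in row.items() if k not in DROP_FIELDS}
            for row in listings]
    shared = {}
    if len(kept) > 1:  # a single listing has nothing to deduplicate against
        shared = {
            k: v for k, v in kept[0].items()
            if all(k in row and row[k] == v for row in kept[1:])
        }
    rows = [{k: v for k, v in row.items() if k not in shared} for row in kept]
    return {"shared": shared, "listings": rows}


def round_aggregates(stats: Dict[str, float]) -> Dict[str, int]:
    """Round aggregate values to whole numbers (11.847 becomes 12)."""
    return {name: round(value) for name, value in stats.items()}
```

Hoisting shared values into one `shared` block is what lets the prompt say "all listings are 4 beds" once instead of ten times.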

Graceful Degradation: When Things Go Wrong

MLSs fail. APIs time out. Ranking bugs happen. We don’t crash—we degrade gracefully.

- If the planner can’t extract constraints: Ask clarifying questions instead of guessing.

- If all MLS queries time out: Return cached aggregates and explain the limitation.

- If ranking fails: Return unranked results and let the agent sort manually.

- If compliance filters block the output: Escalate for human review instead of generating unsafe content.

Every failure mode has a defined fallback. This keeps the system operable even when degraded.
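One way to keep that promise honest is to make the fallback table explicit and check it at import time. The enum members and action names below are illustrative assumptions, not the production identifiers.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Failure(Enum):
    NO_CONSTRAINTS = auto()
    ALL_MLS_TIMED_OUT = auto()
    RANKING_FAILED = auto()
    COMPLIANCE_BLOCKED = auto()


@dataclass(frozen=True)
class Fallback:
    action: str
    user_note: str


FALLBACKS = {
    Failure.NO_CONSTRAINTS: Fallback(
        "ask_clarifying_question", "I need a bit more detail to search."),
    Failure.ALL_MLS_TIMED_OUT: Fallback(
        "return_cached_aggregates", "Live MLS data is unavailable; showing cached stats."),
    Failure.RANKING_FAILED: Fallback(
        "return_unranked_results", "Results are unranked; sort them as needed."),
    Failure.COMPLIANCE_BLOCKED: Fallback(
        "escalate_for_human_review", "This response needs review before it can be shared."),
}

# Fail at import time if a failure mode is ever added without a fallback.
_missing = set(Failure) - set(FALLBACKS)
if _missing:
    raise RuntimeError(f"No fallback defined for: {sorted(f.name for f in _missing)}")


def fallback_for(failure: Failure) -> Fallback:
    """Look up the defined fallback for a failure mode."""
    return FALLBACKS[failure]
```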

Observability: What We Measure and Why

Production systems without observability are black boxes. When latency spikes or a query fails, we need to diagnose the root cause in minutes, not hours.

Query Tracing: End-to-End Visibility

Every query gets a trace ID that follows it through the system: planner → MLS connectors → ranking → evidence builder → LLM → guardrails → response. At each stage, we log:

- Timing: How long did this phase take?

- Decisions: What did the planner choose? What constraints were extracted?

- Data volume: How many MLS results? How many tokens in the evidence?

- Failures: Which MLSs timed out? Which filters triggered?

When a query takes 15 seconds instead of 3, we pull the trace and see: “NWMLS timed out after 3s, CRMLS succeeded in 1.2s, ranking took 2.8s (anomaly: usually <500ms), LLM generation took 7.1s (large evidence context).”

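Now we know where to look first: ranking is the anomaly (2.8s against a usual <500ms), probably due to a deduplication bug. The large evidence context behind the 7.1s generation step is the next candidate.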
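A per-query trace like the one quoted above can be collected with a small context manager. This is a sketch, assuming an in-process `Trace` object with hypothetical stage names; a production system would typically export spans to a tracing backend instead of holding them in a list.

```python
import time
import uuid
from contextlib import contextmanager
from typing import Callable


class Trace:
    """Collects one timed record per pipeline stage under a single trace ID."""

    def __init__(self, clock: Callable[[], float] = time.perf_counter):
        self.trace_id = uuid.uuid4().hex
        self.spans = []
        self._clock = clock

    @contextmanager
    def span(self, stage: str, **attrs):
        start = self._clock()
        record = {"stage": stage, **attrs}
        try:
            yield record  # the stage can attach decisions or data volumes
        except Exception as exc:
            record["error"] = type(exc).__name__
            raise
        finally:
            record["duration_ms"] = round((self._clock() - start) * 1000, 1)
            self.spans.append(record)

    def slowest(self) -> dict:
        """Return the span that took longest, the first place to look."""
        return max(self.spans, key=lambda s: s["duration_ms"])
```

Usage looks like `with trace.span("ranking") as s: s["results"] = len(ranked)`, so timing, data volume, and failures all land in the same record.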

Evidence Diffs: Detecting Non-Deterministic Behavior

MLS data changes constantly. If we retry a query 5 minutes later, we might get different results (new listings, price reductions). That’s expected. But if we retry immediately and get different results, something’s wrong—maybe the ranking algorithm is non-deterministic.

We log evidence snapshots for every query. If a user retries the same question, we compare the evidence:

- Same listings, same order → deterministic (good)

- Same listings, different order → ranking bug (investigate)

- Different listings → MLS data changed (expected)

This catches subtle bugs early. In one case, we discovered that our similarity scoring used floating-point arithmetic that varied slightly across server instances, producing different rankings. We fixed it by switching to integer-based scoring.
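The three-way comparison, plus an integer-scored ranking with a deterministic tie-break, might look like the sketch below. The function names and the choice of listing ID as the tie-breaker are assumptions made for illustration.

```python
from typing import Dict, List


def classify_retry(first_ids: List[str], second_ids: List[str]) -> str:
    """Compare the listing IDs from two runs of the same query."""
    if first_ids == second_ids:
        return "deterministic"      # same listings, same order
    if sorted(first_ids) == sorted(second_ids):
        return "ranking_bug"        # same listings, different order
    return "data_changed"           # different listings


def rank(scores: Dict[str, int]) -> List[str]:
    """Order by integer score, highest first, breaking ties by listing ID.

    Integer scores compare identically on every server, and the ID
    tie-break removes any dependence on input order.
    """
    return sorted(scores, key=lambda listing_id: (-scores[listing_id], listing_id))
```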

Redaction and PII Minimization

Logs contain user queries, MLS data, and generated responses. Some of this is personally identifiable information (PII): addresses, listing agent names, user emails. We can’t store this forever without compliance risk.

Our logging pipeline:

- Log everything in hot storage (7 days)

- Redact PII and archive to cold storage (7 years)

- Aggregate metrics without PII (median latency by query type, cache hit rates, filter trigger rates)

Redaction replaces specific values with placeholders: “123 Queen Anne Ave N” → “[ADDRESS]”. This keeps traces useful for debugging while minimizing compliance exposure.
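Placeholder redaction can be approximated with regular expressions. The patterns below are deliberately simple assumptions: they catch common email and US street-address shapes, and a real pipeline would need broader coverage (names, phone numbers, unusual address formats) and probably a dedicated PII detector.

```python
import re

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")

# Heuristic: house number, 1-4 capitalized words, a street suffix,
# and an optional compass direction.
ADDRESS_RE = re.compile(
    r"\b\d{1,6}\s+(?:[A-Z][A-Za-z]*\s+){1,4}"
    r"(?:Ave|St|Rd|Blvd|Dr|Ln|Way|Ct|Pl)\b\.?"
    r"(?:\s+(?:NE|NW|SE|SW|N|S|E|W)\b)?"
)


def redact(text: str) -> str:
    """Replace emails and street addresses with fixed placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = ADDRESS_RE.sub("[ADDRESS]", text)
    return text
```

Fixed placeholders keep the shape of the log line intact, so a redacted trace still shows where an address or email appeared.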

Security and Data Handling: Why We License Instead of Scrape

Many AI companies scrape the web and train on it. Real estate doesn’t work that way. MLS data is licensed, not public. Scraping violates contracts and gets you sued. Training on MLS data without permission violates licensing agreements.

We made a deliberate choice: licensed API access, no scraping, no training on MLS data. Here’s why.

Contractual Obligations

Every MLS integration has a contract specifying:

- Scope: What data we can access (sold listings, active listings, photos)

- Retention: How long we can store it (usually no more than cache TTLs)

- Usage: What we can do with it (display to users, generate analytics, not train models)

- Rate limits: How many requests per second (varies by MLS)

Violating these means license revocation, which kills the product. By treating MLSs as external APIs—query on demand, cache minimally, never train—we stay compliant.

The Cost: Latency and Rate Limits

This choice has costs. API calls add latency (500ms–2s per MLS). Rate limits force us to batch and parallelize carefully. Cache TTLs mean we can’t store MLS data long-term.

But the alternative—scraping and fine-tuning—would expose us to legal risk and make compliance impossible. You can’t audit a fine-tuned model to prove it didn’t leak protected class biases from scraped listings.

Encryption and Key Rotation

MLS credentials (API keys, OAuth tokens) are encrypted at rest using AWS KMS. We rotate keys every 90 days. Access follows the principle of least privilege: only the MLS connectors have credentials, and they’re scoped to specific read permissions.

Logs are encrypted in transit (TLS 1.3) and at rest (AES-256). This isn’t just security theater—MLS audits check for these controls.

Production Learnings: What Surprised Us

Here’s what we didn’t expect when we first deployed this system.

The hardest queries aren’t the complex ones. We thought comp analyses would be the toughest. They’re not—they have clear constraints and well-defined ranking logic. The hardest queries are ambiguous ones: “What’s the market doing in North Seattle?” North Seattle could mean 10+ neighborhoods. “Market” could mean prices, inventory, DOM, or trends. We’ve gotten better at clarifying instead of guessing, but it’s still the biggest source of user friction.

Cache invalidation is harder than we thought. We started with simple TTL-based caching. Then we realized: if a user searches for “Queen Anne sold listings” at 9am and again at 9:05am, they expect consistent results, but if a new sale lands at 9:02am, the cached answer is already stale. We added smarter invalidation (triggered by MLS webhook events), but it’s still not perfect. Some MLSs don’t expose webhooks, so for those we fall back to TTL.

Compliance is a moving target. Fair Housing regulations don’t change often, but MLS policies do. CRMLS added new data usage restrictions mid-deployment. We had to update connectors to block fields we were previously allowed to show. This taught us: never hard-code compliance logic. Store allowlists in configuration, not code, so we can update without redeploying.

Observability pays for itself. We initially skipped detailed query tracing to save on storage costs. Bad decision. When latency spiked, we had no visibility into why. After adding tracing, we found the root cause (a slow MLS connector) in 10 minutes instead of 3 hours. The storage cost (~$200/month) is negligible compared to engineering time.

Users care more about citations than we expected. We thought clickable listing IDs were a nice-to-have. They’re the feature agents mention most. Being able to verify every comp with one click builds trust in a way that generic “AI-powered” marketing never could.

Scaling Considerations: What’s Next

Right now, we operate in Washington and California. Expanding to new markets means:

- New MLS integrations (each with different schemas, policies, rate limits)

- Geographic data normalization (“Queen Anne” in Seattle ≠ “Queen Anne” in Maryland)

- Compliance variations (some states have stricter protected class definitions)

We’re not there yet, but the architecture is designed for it. MLS connectors are pluggable. Guardrails are configurable per-market. Evidence builders normalize schemas before downstream processing.

The bottleneck won’t be technical—it’ll be legal and operational. Each MLS requires contract negotiation, compliance review, and policy tuning. That doesn’t scale automatically.

Closing Thoughts: Lessons for Regulated AI

If you’re building AI for regulated industries—healthcare, finance, legal—here’s what we learned:

Compliance first, not compliance later. You can’t patch Fair Housing Act adherence into a fine-tuned model. Build guardrails into the architecture from day one.

Observability is non-negotiable. In regulated domains, “the model did something unexpected” isn’t an acceptable explanation. You need traceability for every decision.

Graceful degradation beats perfection. APIs will fail. MLSs will time out. Your system should degrade gracefully rather than crashing or hallucinating.

Security and compliance go together. Encryption, access controls, and audit logs aren’t just InfoSec requirements—they’re compliance requirements.

Users value accuracy over fluency. Real estate agents would rather wait 5 seconds for a verified answer than get an instant hallucination. Build for their needs, not for demo day.

Running agentic AI in production is different from building it. The architecture matters, but so does the operational discipline: compliance by design, observability from day one, and graceful handling of the inevitable failures. That’s how you go from prototype to production in a regulated industry.

This post is part of a series on building MLS-grounded AI. Read the architecture post for details on how the agent loop works.